The year that was

and what's next!

For those of you deep in the year-end celebrations, it may come as a shock that my plan for today was to keep working on some of the new features we have planned for Zingg. Going out or meeting people is ruled out anyway, given the sudden surge in Covid cases. Building stuff is fun, so I do not feel the need for a break right now. Still, I realized today that there is something special about the last day of the year. You can try as hard as you like to keep your head down and focus on what is urgent, but the cheer all around forces you to take stock, look back, and think about what's next. So here I am, reminiscing on the year gone by!

The year started with me working head-down on some of the core algorithms for entity resolution in Zingg. Entity resolution, in a nutshell, is determining which records belong to the same real-world person, organization, or product. Our operational systems are surprisingly full of multiple records for the same entity, differing in salutations, abbreviations, omissions, typographical errors, missing fields, and other variations. Unless these records are resolved to the actual customer or supplier, even simple analytics such as customer lifetime value or average repeat orders are error-prone. If your operational systems have this problem, you can build a warehouse or a data lake, but you cannot trust the insights it gives.

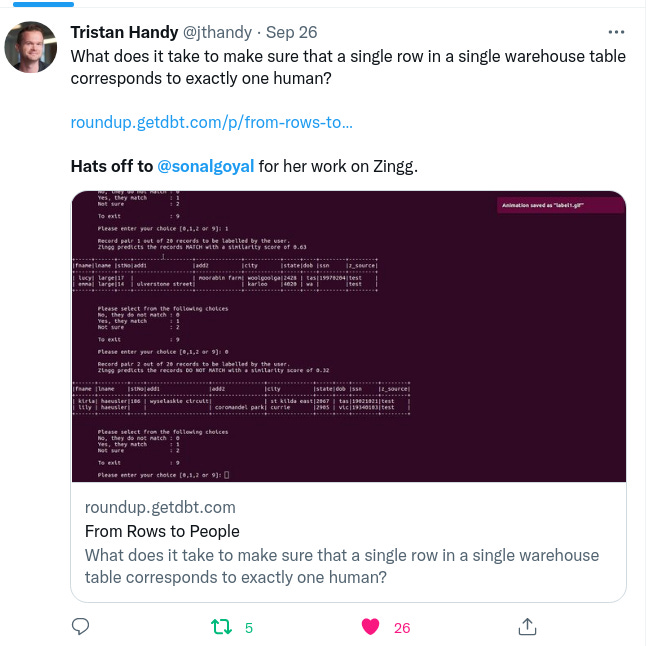

Since entity resolution is needed equally for customers, suppliers, addresses, properties, organizations, and products, a learning-based approach that could cater to different entity types appealed to me. Without an academic background in machine learning, I found designing a generic machine learning product far from trivial. As I worked through these challenges, one big question arose: where would Zingg get the training data from which to learn the patterns and rules for data matching? This led to Zingg's interactive console labeler, which shows pairs of records that can be marked as matches or non-matches to train the system. With the labeler and the rest of Zingg, someone who has no background in machine learning but understands the data can easily build production-grade machine learning models.

Around September, I felt I was ready to open it all up. Would anyone notice? Would people find Zingg useful? I had my share of the usual jitters, excitement, and anticipation! Kind of like a thriller, except you are the one who is about to be murdered :-)

This tweet from Tristan Handy, founder of dbt Labs, answered it all for Zingg and me. There is no better endorsement of the problem we are solving than acknowledgment from people in the thick of it! And dbt is right at the center of the entire Modern Data Stack movement!

Tristan's blog post covering Zingg has been the highlight of the year for Zingg and me.

The 3.5 months since we open-sourced Zingg have been great. There are 41 community members on Slack and 343 stars on GitHub. The number of unique clones in December alone has passed 100, and early feedback has started to come in.

Overall, it feels like a year well spent!!

The belief behind Zingg is simple: to make sure that our effort in building data warehouses and data lakes is amply rewarded with correct insights; to enable deeper relationships with customers and related parties by understanding our first-party data correctly; to enrich these relationships with external data points; and to make it easy to build systems that need data matching.

At a deeper level, Zingg's mission is to help more of us data folks with no background in machine learning easily train and build ML models: to specify domain knowledge in whatever way we prefer (SQL, Java, or Python) and let the system figure out the rest. For those with ML expertise, Zingg's mission is to free them from the humdrum of entity resolution and let them move on to higher-order problems worthy of their attention.

Philosophically, I believe we need tools that help us spend our time wisely, tools that help us achieve more individually and collectively. They should let us spread our wings, explore uncharted territory, and get more done. I am going to double down on this belief in the coming year.

Happy New Year, everyone. To 2022!